Is there a workaround for the "cell data size exceed limit" error?

Hi Hugo, thanks for reaching out :) The team is working on this, but unfortunately there is no direct fix at the moment. As a potential workaround, you could try scraping the LinkedIn jobs using Clay. Scrape LinkedIn Jobs: https://www.clay.com/learn/how-to-scrape-linkedin-jobs

Hope this helps :)

Yeah, to feed it to GPT and get a JSON output. Do you think that's possible?

This fixed it indeed!

Great question, Hugo. I couldn't see the table anymore, but ChatGPT may have been reading only the first available job post. You can give it the entire list of jobs, or each individual job description field from the list. That way it has context for all of them; you may also need to wait for more jobs to load.
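For anyone hitting the same "only the first job" problem: a minimal sketch of the idea, assuming you have the job descriptions as a list of strings. It packs every description into one numbered prompt and then checks that the JSON reply contains one entry per job. The `title`/`location` keys and both function names are hypothetical, and the actual GPT call is left out because it depends on how you run it (Clay's AI column, the OpenAI API, etc.).

```python
import json


def build_jobs_prompt(job_descriptions: list[str]) -> str:
    """Put every job description into one prompt so the model sees all of them,
    not just the first post. The requested keys are illustrative only."""
    numbered = "\n\n".join(
        f"Job {i}:\n{desc.strip()}" for i, desc in enumerate(job_descriptions, start=1)
    )
    return (
        "Extract the title and location of each job below. "
        'Reply only with a JSON array of objects with keys "title" and "location".\n\n'
        + numbered
    )


def parse_jobs_json(reply: str, expected_count: int) -> list[dict]:
    """Parse the model's reply and confirm that every job came back."""
    data = json.loads(reply)
    if not isinstance(data, list):
        raise ValueError("expected a JSON array")
    if len(data) != expected_count:
        raise ValueError(f"expected {expected_count} jobs, got {len(data)}")
    return data
```

The count check is there so a reply that silently covers only the first job fails loudly instead of looking like valid JSON.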

Hey! How can I find the websites that are missing from my list? Perhaps using LinkedIn enrichment?

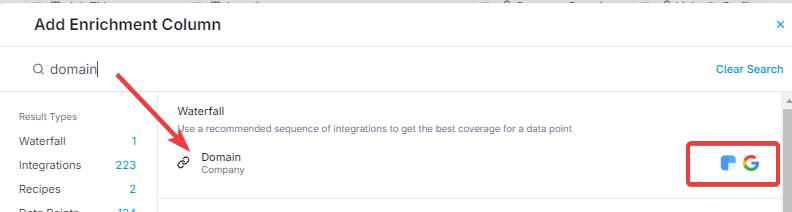

Hey! There are several options you can try here, but if you have the company's LinkedIn URL, that will definitely help. Otherwise, if you only have the company name, you can use Clearbit or Google... Example of a domain waterfall:

You can also give it a condition so that it only runs when the other column you have is empty, which saves credits, if that helps.
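To make the waterfall + run-condition logic concrete, here's a rough sketch in plain Python. It is not how Clay implements waterfalls (you set those up in the table UI); it just shows the idea: skip the row entirely if the website is already filled, otherwise try each source in order and stop at the first hit. The `linkedin_lookup`/`clearbit_lookup` functions are hypothetical stand-ins, not real provider APIs.

```python
from typing import Callable, Optional

# A lookup takes a company name and returns a domain, or None when it finds nothing.
Lookup = Callable[[str], Optional[str]]


def domain_waterfall(row: dict, lookups: list[tuple[str, Lookup]]) -> dict:
    """Try each lookup in order and stop at the first one that returns a domain."""
    # Run condition: only run when the website column is empty, so no lookup
    # (and no credit) is spent on rows that already have one.
    if row.get("website"):
        return row
    for source, lookup in lookups:
        domain = lookup(row["company_name"])
        if domain:
            return {**row, "website": domain, "website_source": source}
    return row  # every lookup came back empty; leave the row unchanged


# Hypothetical stand-ins for real enrichment providers; each records that it was called.
calls: list[str] = []


def linkedin_lookup(name: str) -> Optional[str]:
    calls.append("linkedin")
    return None  # pretend no LinkedIn URL is on file for this company


def clearbit_lookup(name: str) -> Optional[str]:
    calls.append("clearbit")
    return {"Acme": "acme.com"}.get(name)


LOOKUPS = [("linkedin", linkedin_lookup), ("clearbit", clearbit_lookup)]
```

With these stand-ins, a row like `{"company_name": "Acme", "website": ""}` falls through LinkedIn and gets `acme.com` from the Clearbit step, while a row that already has a website never calls either lookup.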